Academically speaking, it seems that the most common interpretation of intelligence is that it is something that is both (a) quantifiable, and (b) strongly correlated with a person's likelihood of achieving particular types of education and/or employment. In other words, statistics and practical measurements are considered extremely important.

However, in circles that are more philosophical (and frequently political), there doesn't seem to be a "most common" interpretation at all, but rather the endless dance of perpetual debate. There are those who see it as a no-brainer that "intelligence" must require self-awareness, and those who believe that this is obviously not the case. There are those who equate intelligence with g, which can supposedly be measured using IQ tests -- and those who see IQ tests as outmoded hokum, and "g" as an oversimplification at best. Thankfully, most of Saturday's speakers managed to discuss intelligence in the context of particular topical domains, setting up important terms at the start of each of their talks -- and giving the overall impression of a loose coalition of thinkers all grasping at parts of something too large for any one of us to grok in fullness. Most (if not all) of them seemed cognizant of this fact.

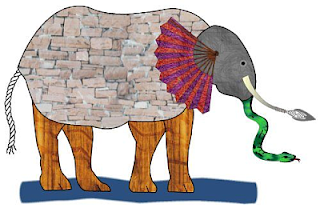

The idea of a general intelligence presupposes, perhaps, an AI's ability to learn pretty much anything a human might be able to conceive of learning (and perhaps more, based on its own priorities and capacities), but in practice, there are likely to be different "flavors" of general machine intelligence. We humans like to call ourselves "generalists", and the fact that humans are capable of learning new skills without being hard-coded for those specific skills indicates that this is true to some extent, but really, our intelligence is quite narrow when viewed against the grand scheme of all possible minds. Most people may be able to learn most things people can imagine wanting to do; however, it is clear that different humans are optimized to find different things easier or more difficult.

Additionally, we all specialize over time; a particular person might be equally capable of earning a degree in business, graphic design, or basket-weaving, but he is not likely to major in all three simultaneously. And even the most cursory glance at the spectrum of human abilities reveals variations in how these abilities are "weighted" from person to person. A video by a friend of mine demonstrates how two abilities that might seem conceptually similar (in this case, the ability to identify auditory tones and the ability to identify colors) do not often manifest together; most sighted people can easily distinguish between red and blue, but very few can identify a "C" or an "A flat" in the absence of a reference tone. So I remain skeptical of the very idea of "general intelligence", at least in the sense that most people probably think of it (which is likely heavily weighted toward the range of stuff that a particular person finds comprehensible).

But: clearly, some people believe that they have some workable conceptualization of a "general intelligence" model. A fair percentage of them were probably in the Palace of Fine Arts the weekend of this year's Summit.

Personally, I think that the best way to start a discussion about intelligence is to put it in terms of a "black box". We can't pretend that we know exactly how the brain -- any brain -- understands things. Though neuroscience can describe some aspects of cognition, we still don't know enough to design and construct an artificial, thinking mind based on human models. What we do know is that when you combine a person with an environment, it is the person's brain that allows that person to process the information she acquires through her senses, and to further incorporate that information into her internal model of how reality works.

This being the case, I appreciated Eliezer Yudkowsky's slide depicting a brain along with various inventions dreamed up and made reality by humans throughout the ages. Whether or not you agree that "intelligence" is that thing which has allowed the creation of space shuttles, skyscrapers, and projectile weapons, such a slide at least makes it apparent that, for the purposes of the ensuing discussion, this is what is meant by "intelligence" (or at least "cognitive factors"). Yudkowsky also displayed a slide showing the fallacy of trying to judge AI development on a purely human-centric scale. Whatever you might say about Yudkowsky (and I don't know him well enough to say very much at all, and even if I did, I have no interest in or taste for gossip), one thing I do appreciate about his usual approach to the subject of intelligence is that he seems to think very much in terms of a broad spectrum of potential minds.

In his closing statements, Yudkowsky emphatically pleaded with the audience to, "for the love of cute kittens", define the term singularity if they were going to use it at all. I would say that it is perhaps more important to define your conceptualization of intelligence. After all, it's difficult to coherently discuss what you mean by an "intelligence explosion" if you haven't made it clear what, exactly, you expect to be exploding!

The next two presentations offered other, variant "flavors" of the interpretation of intelligence, along with different example AGI applications.

The Practical Case for AGI

Next, Barney Pell, Ph.D., presented on Pathways to Advanced General Intelligence: Architecture, Development, and Funding. He didn't offer a concise definition of "intelligence" from his perspective up front; however, he did suggest "able to perform the kinds of jobs that high school students are generally hired for" as a marker of significant progress toward true AGI.

According to Pell, the "AI research community" (I'm not sure who comprises this community -- I'm guessing it depends on who you ask) holds a consensus that we will probably have such an AI within 100 years. Pell noted that there are a number of probable major milestones and accomplishments that must precede the development of an AI with "worker"-level capacity or greater. Pell pointed out, though, that not all AI models will necessarily be human-inspired -- he presented a graph showing "development" on one (y) axis and "architecture" on the other (x). Theoretical projections as to the nature of possible "created" minds span a potential space that includes everything from human babies to highly advanced computers that do not resemble humans in either their developmental style or their construction.

Success has been functionally nil in the area of artificial general intelligence, and many people have gravitated toward "narrow" AI applications -- understandable, since companies have to watch their profit margins in order to stay afloat, and frequently frown on ambitious R&D with highly uncertain outcomes. As Pell pointed out, not only is AGI "hard", but companies have many incentives to focus on "incremental research and low-hanging fruit". And regardless of their limitations, specialized AI systems do often provide outstanding performance in the particular area for which they were designed -- Deep Blue might not be able to learn games other than chess, but it does play a good game of chess. Since most companies focus on a particular product or product line, "narrow AI" might be all they ever need.

Nevertheless, suggests Pell, it is possible that AGI might actually become "essential to product value" if developed. Military scientists are understandably intrigued by the prospect of AGI-enhanced robots and other systems; as Pell pointed out, the nature of warfare itself has changed in recent years, and "the rules of combat change all the time". An autonomous system capable of recognizing and responding appropriately to these rule-changes would no doubt benefit from AGI (the question of whether humanity would benefit from such applications is another discussion altogether).

More commercially promising (and less controversial) near-term potential applications of AGI include video games and virtual worlds (in which, according to Pell, AI is now becoming tremendously important to gameplay, above and beyond graphics), along with natural language search and conversational Web and computer interfaces.

As an example of a practical application likely to benefit from (and drive) AI research, Pell showed a few slides on the in-development Powerset -- an ambitious project with the goal of "building a natural language search engine that reads and understands every sentence on the Web." Language is a very promising area for AI, considering how complex and context-dependent it is when humans use it -- any program capable of processing and understanding language would by necessity have to be incredibly sophisticated and flexible.

This discussion thread led to a question during Pell's Q&A session about the possibility of voice interfaces eventually "replacing" text altogether. I am always vaguely disturbed whenever I hear people bringing up this notion -- it seems like a no-brainer that it's better for people to have more communication choices and methods of interacting with their favorite machines, not fewer.

People who postulate a supposedly ideal "voice in, voice out" computing and communication paradigm must never have worked in a cubicle-based office -- I need noise-cancelling headphones on the basis of people's hallway and phone conversations alone where I work, and I can't even fathom the horror of working in an office where everyone is yammering out loud at their computers all day long, with their machines duly yammering back! Not to mention the fact that there are various populations of persons in the world who prefer or even require text-based interfaces -- I shudder to imagine a future in which basic keyboards are relegated to the status of "special need".

In light of this, I was pleased to see Pell reminding Summit attendees of the fact that auditory strings are by nature serial, and therefore much slower to search than text strings. While natural language processing (fueled by AGI) may indeed improve voice interfaces tremendously, I would hate to see constant, ubiquitous chatter and illiteracy become the norm. Thankfully, I seriously doubt this future will ever actually come about, seeing as there are so many obvious cases where either text or voice is the better medium for information storage or communication. It just surprises me that anyone would even bother postulating a voice-only future.

Near the conclusion of his presentation, Barney Pell addressed the question of why he supports the Singularity Institute for Artificial Intelligence -- basically, while he recognizes that it may be impossible to predict precisely when "profound change" starts to come about as a result of AI and other technological developments, he sees the need to discuss the matter sooner rather than later. He suggested that in the future, "people will thank futurists" (presumably, for at least attempting to open up the topic space surrounding key potential tech-driven changes). Given the (mixed, at best) track record of "futurists" throughout the ages, I can't say whether I necessarily agree with Pell on this point.

Overall, Pell's presentation was very much grounded in the "here and now", and his examples of military, gaming, and language-processing applications for AI definitely made a compelling case for the idea that AI is far from being a mere science fiction pipe dream. If AGI can be developed, it does seem that the infrastructure exists through which it may, within the next few decades or so, be developed.

I Give You Your Faults

"Meg, I give you your faults."

"My faults!" Meg cried.

"Your faults."

"But I'm always trying to get rid of my faults!"

"Yes," Mrs. Whatsit said. "However, I think you'll find they come in very handy on Camazotz."

From "A Wrinkle in Time" by Madeleine L'Engle

IBM engineer Sam Adams probably had one of the most compelling presentation titles at the entire event -- he spoke on Superstition and Forgetfulness – Two Essentials for Artificial General Intelligence. Fittingly, the presentation itself turned out to be one of the more technical (and critical) talks at the Summit. Adams approached AI from a very human-centered perspective, stating at the beginning of his talk that "the only known pathway to human level intelligence is the one that we ourselves have traveled". He introduced the audience to the Joshua Blue project -- a currently on-hold IBM effort to develop an artificial mind based on what we know about how human minds develop starting in early infancy. Unlike Deep Blue -- IBM's earlier celebrated contribution to the world of AI -- Joshua Blue is intended to be capable of learning in multiple domains.

Adams presented a view of intelligence (or perhaps more properly, of the cognitive faculties that humans tend to associate with "intelligence") that emphasized subjective experience and internal representation as essential features of a practical brain-based AI model. His presentation title, which refers to "superstition and forgetfulness", is an engineer's invocation of traits that (at first glance) appear to be faults, but that may in fact be critical to producing a system that can learn. Despite the impressive facility of Deep Blue at playing chess, and of our desktop computers at performing a variety of useful processing feats, Adams pointed out that "no computer [yet] has the common sense of a six-year-old child". Common sense, according to Adams, is intimately bound to the experience of a common sensation, which produces a common experience.

A bit of a personal aside here: while growing up, I was very often told that I "lacked common sense". I wasn't exactly sure what this meant, since nobody ever seemed able to precisely define what common sense was -- it was just something I was expected to have, something that would somehow intangibly guide me toward choices that would please adults, help ensure my personal safety, etc. Looking back, it now seems fairly obvious that the reason I seemed to lack "common sense" was that I was, in fact, not having what Sam Adams would define as a "common experience" with those around me. I was constantly swimming in a sea of details, perceiving the smallest parts of objects around me very directly (my parents recall me being curious about everything from the holes in the telephone receiver to the composition of human hair). Everything was very loud, very bright, and highly textured.

Of course, I thought this was perfectly normal; like most humans, I figured that the way I saw the world was the way everyone saw the world. Therefore, my supposed "lack of common sense" (which is, I guess, what prompted me to do things like climb shelves, crawl into the refrigerator, and ask "obvious" questions) remained a mystery to me for years. In light of this, I think that would-be AI-builders should be careful of defining "common experience" in too narrow a fashion; neurotype, culture, native language, and other distinguishing factors can all contribute toward a person's experience having more or less in common with that of others.

Another of the subjective-experience factors Adams emphasized is time-consciousness. As he stated, our cognition is "time-bound", meaning that a mind processing information at rates orders of magnitude above human speeds (which, though I'm sure they vary, still fall within a relatively narrow range) would experience reality in ways that no human could even begin to imagine. This makes sense -- after all, the faster a mind can process information, the slower everything around it seems to be happening; if you've ever nearly fallen off a cliff or nearly gotten into a car accident, you are probably familiar with the sense of being in slow motion that occurs in such situations.

This effect has been studied; a bungee jumper was found to be able to read numbers on a digital display in mid-jump more accurately than if he'd been sitting safely on solid ground. If you could perceive everything at that level of time-consciousness all the time, it is very likely that your entire experience would be dramatically different from what it is at present. This sort of thing is, of course, really only worth thinking about if (a) you are trying to build a computer that thinks like a human, and (b) you think it is important for AIs to be able to "relate" to humans in particular ways. And Adams' premise meets both those criteria.

If successful, Joshua Blue would effectively emulate the "bootstrapping of the human mind" as it is thought to occur between early infancy and about three years of age. Adams described how this process depends upon understanding various architectural cues from human development -- we know, for instance, that the density of neurons in the brain is approximately the same at birth as in adults, and that it is the connectivity of these neurons that changes as a person learns and grows. Basic neuron theory explains brain connectivity and learning as the process by which synaptic connections develop as neurons fire -- when neurons fire close enough together, synapses form. However, brain research has shown that synaptic connectivity changes drastically at various points in development, and that the breaking of certain connections ("forgetting") is part of how the brain optimizes itself as the person grows. Hence Adams' assertion that "forgetting" is essential for artificial intelligence -- you only want the AI to maintain connections that are actually useful, as opposed to those that serve merely to distract. Some of this "forgetting" happens incidentally, but Adams also referred to what he called "global plasticity events", in which connections break or rapidly rearrange at various key developmental stages.
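To make the "wire together, then forget" idea a little more concrete, here is a toy sketch in Python. It is not Joshua Blue's code (the talk didn't go into implementation details); it is a generic Hebbian-style rule I chose for illustration. The function names `hebbian_step` and `prune`, and the `rate`, `decay`, and `threshold` values, are all hypothetical. Links between units that are active together get stronger, every link fades slightly each step (incidental forgetting), and a pruning pass that drops weak links stands in, very crudely, for a "global plasticity event".

```python
import itertools

# Toy illustration only: a Hebbian-style "fire together, wire together" rule
# with decay and threshold pruning. Not taken from Joshua Blue.


def hebbian_step(weights, active, rate=0.1, decay=0.02):
    """One learning step over a dict mapping (unit, unit) pairs to link strengths."""
    # Incidental forgetting: every existing link fades a little each step.
    for pair in list(weights):
        weights[pair] = max(0.0, weights[pair] - decay)
    # Units that are active together get their link created or strengthened.
    for pair in itertools.combinations(sorted(active), 2):
        weights[pair] = weights.get(pair, 0.0) + rate
    return weights


def prune(weights, threshold=0.05):
    """Crude stand-in for a pruning event: drop every link below `threshold`."""
    return {pair: w for pair, w in weights.items() if w >= threshold}


# One stray coincidence early on, then a pairing that keeps recurring.
episodes = [{"face", "curtain"}] + [{"face", "voice"}] * 5
weights = {}
for active in episodes:
    hebbian_step(weights, active)

print(prune(weights))  # only the ("face", "voice") link survives
```

The one-off "face"/"curtain" link decays to nothing and is pruned away, while the repeated "face"/"voice" pairing survives; that is the sense in which forgetting keeps only the connections that keep proving useful.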

Because of my interests in both autism and neurology in general, I've done a fair bit of reading on the subject of child development. Some studies suggest that "global plasticity events" occur differently in autistic people than in neurotypical people, and when Adams started talking about the "pruning" process that occurs to keep children focused on particular things in their environment, I couldn't help but wonder if anyone has yet considered making a neuro-atypical AI. Maybe it would be easier to create a "non-NT" AI, or maybe it would be more difficult, but in any case, I think anyone even considering creating an artificial brain ought to become well versed in the research suggesting that not all brains accomplish the same tasks the same way. Otherwise, I think there's a real risk of missing true progress in this field, since someone might very well create an artificial mind that they end up dismissing as "not a mind at all" simply because they don't understand what it is doing and how it is doing it. Just my 2 cents.

Continuing onward, Adams explained what he meant by "superstition" in his title -- that is, the fact that people are generally able to believe certain things prior to fully understanding them. When someone observes two or more events occurring coincidentally for the first time, she has no way of knowing for certain whether this coincidence represents an important pattern or simple chance. So the brain stores the fact that the events coincided, in order to use this information to build predictive models.

One visualization of this concept might be (and this is my example, not Adams') a case in which someone pushes a button, and then a light goes on in the room he is in. He won't know the first time whether the button press actually caused the light to go on, but his brain will record the fact that the light coincided with the button press -- in other words, the brain will open itself up to the possibility that pressing the button caused the light to go on. If the person really wants to know what the relationship is between the light and the button, he can now proceed with further experimentation using his brain's new predictive model -- he at least suspects that the button might have something to do with the light.
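The button-and-light example can be sketched as a tiny "superstition store" in Python. This is a hypothetical toy of my own, not anything Adams presented: the class name `SuperstitionStore` and its methods are made up for illustration. The point it shows is that a single coincidence is enough to create an entry (a hunch), and that later observations refine the predictive model rather than erase it.

```python
from collections import Counter
from itertools import permutations


class SuperstitionStore:
    """Hypothetical toy memory that records which events have coincided."""

    def __init__(self):
        self.seen = Counter()      # episodes in which each event occurred
        self.together = Counter()  # episodes in which both events of (a, b) occurred

    def observe(self, events):
        """Record one episode; a single coincidence is enough to create an entry."""
        events = set(events)
        self.seen.update(events)
        for a, b in permutations(events, 2):
            self.together[(a, b)] += 1

    def suspects(self, effect):
        """Every event that has ever coincided with `effect`: hunches, not conclusions."""
        return sorted(a for (a, b) in self.together if b == effect)

    def predict(self, cause, effect):
        """Fraction of episodes containing `cause` that also contained `effect`."""
        if self.seen[cause] == 0:
            return 0.0
        return self.together[(cause, effect)] / self.seen[cause]


store = SuperstitionStore()
store.observe({"button", "light"})       # first press: the light happens to come on
print(store.suspects("light"))            # ['button']
print(store.predict("button", "light"))   # 1.0, from a single observation
store.observe({"button"})                 # second press: nothing happens
print(store.predict("button", "light"))   # 0.5
```

Note that the store happily reports a 100% "prediction" after one press; it records the coincidence but has no way of judging whether it means anything.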

An AI capable of learning in a real environment (in, as Adams suggests, "a variety of embodiments") must be able to form similar models in order to make sense of that environment. That is, it should be (as the brain is) able to note coincidences and store them ("superstitions") to streamline the process of predicting probable future events and figuring out causality. Of course, we've all seen this process go haywire in humans. Medical quackery is alive and well even in the 21st century, despite all our advances in scientific understanding, probably because many people have an easier time processing their own direct experience than dry stacks of clinical statistics. This is not to say that there is no such thing as overuse of statistics and inappropriate dismissal of direct experience -- there certainly is, but my point is that the key here is getting to the right information. And getting to the right information requires knowing when to prioritize which data from which source. Clearly, along with the ability to associate coincident events, AIs must also be equipped with a critical thinking engine of some sort -- otherwise, we could end up with computers demanding their very own tinfoil hats!
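One very small piece of such a "critical thinking engine" might be a check that compares how often an effect follows a suspected cause with how often it happens anyway. The sketch below uses the contingency measure usually written ΔP in the psychology of causal learning, P(effect | cause) minus P(effect | no cause). This is my own illustrative choice, not something Adams proposed, and the names `Tally`, `delta_p`, and `verdict`, as well as the `min_trials` and `threshold` cutoffs, are hypothetical and arbitrary.

```python
from dataclasses import dataclass


@dataclass
class Tally:
    """Counts from trials where a candidate cause was present or absent."""
    trials_with_cause: int = 0
    effect_with_cause: int = 0
    trials_without_cause: int = 0
    effect_without_cause: int = 0

    def record(self, cause_present: bool, effect_seen: bool) -> None:
        if cause_present:
            self.trials_with_cause += 1
            self.effect_with_cause += int(effect_seen)
        else:
            self.trials_without_cause += 1
            self.effect_without_cause += int(effect_seen)


def delta_p(t: Tally) -> float:
    """Contingency P(effect | cause) - P(effect | no cause).

    Needs at least one trial in each condition, otherwise it divides by zero.
    """
    p_with = t.effect_with_cause / t.trials_with_cause
    p_without = t.effect_without_cause / t.trials_without_cause
    return p_with - p_without


def verdict(t: Tally, min_trials: int = 5, threshold: float = 0.5) -> str:
    """Treat a coincidence as a hunch until enough contrasting evidence exists."""
    if min(t.trials_with_cause, t.trials_without_cause) < min_trials:
        return "hunch"  # too little evidence either way: keep experimenting
    return "likely cause" if delta_p(t) >= threshold else "probably coincidence"


button = Tally()
button.record(True, True)
print(verdict(button))  # hunch
for _ in range(5):
    button.record(True, True)    # pressed, light came on
    button.record(False, False)  # not pressed, light stayed off
print(verdict(button))  # likely cause

charm = Tally()  # a "lucky charm": the effect shows up equally often without it
for _ in range(5):
    charm.record(True, True)
    charm.record(True, False)
    charm.record(False, True)
    charm.record(False, False)
print(verdict(charm))  # probably coincidence
```

The design choice worth noticing is that the superstition is never thrown out early: it stays a "hunch" until there are enough trials both with and without the suspected cause, which is roughly what the person experimenting with the button would do.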

I mentioned earlier that the Joshua Blue project is currently on hold. According to Sam Adams, IBM does not think that the project is worth continuing until better models, high-fidelity virtual worlds, and numerous "high-fidelity core processing systems" become available. Despite tremendous improvements in computing hardware over the past few years, Adams suspects that current hardware is still not up to par for Joshua Blue, and would prefer to wait for more appropriate hardware even if the project were fully funded at the moment. In conclusion, however, Adams reiterated his belief that in order to achieve AGI, the best we can do is "follow the child".

This has been Part 2 of a multi-part series of articles on the 2007 Singularity Summit.