Such an increase of intelligence, it is widely believed by the singularians, will bring perennial human longings such as immortality and universal prosperity to fruition. Miller has put his money where his mouth is. Should he die before the promised Singularity arrives he is having his body cryonically frozen so the super intelligence at the other side of the Singularity can bring him back to life.

Yes, it all sounds more than a little nuts.

Miller’s argument against the Singularity being nuts is what I found most interesting. There are so many paths to us creating a form of intelligence greater than our own that it seems unlikely all of these paths will fail. There is the push to create computers of ever greater intelligence, but even should that not pan out, we are likely, in Miller’s view, to get hold of the genetic and biological keys to human intelligence- the ability to create a society of Einsteins.

Around the same time I came across Miller’s views, I also came across those of Neil Turok on the transformative prospects of quantum computing. Wanting to get a better handle on that, I found a video of one of the premier experts on quantum computing, Michael Nielsen, who, at the 2009 Singularity Summit, suggested the possibility of two Singularities occurring in quick succession: the first on the back of digital computers and the second on the back of quantum computers designed by binary AIs.

What neither Miller, nor Turok, nor Nielsen discussed, a thought that occurred to me but that I had seen nowhere in the Singularity or Sci-Fi literature, was the possibility of multiple Singularities, arising from quite different technologies, occurring around the same time. Please share if you know of an example.

I myself am deeply, deeply skeptical of the Singularity but can’t resist an invitation to a flight of fancy- so here goes.

Although perhaps more unlikely than a single path to the Singularity, a scenario where multiple and quite distinct types of singularity occur at the same time might conceivably arise out of differences in regulatory structure and culture between countries. As an example, China is currently racing forward into the field of human genetics with efforts at its Beijing Genomics Institute 华大基因. China seems to have fewer qualms than Western countries regarding research into the role of genes in human intelligence and appears to be actively pursuing research into genetic engineering and selection to raise the level of human intelligence at BGI and elsewhere.

Western countries appear to face a number of cultural and regulatory impediments to pursuing a singularity through the genetic enhancement of human intelligence. Europe, especially Germany, has a justifiable sensitivity to anything that smacks of the eugenics of the brutal Nazi regime. America has, in addition to the Nazi example, its own racist history, eugenic past, and the completely reasonable apprehension of minorities toward any revival of models of human intelligence based on genetic profiles. The United States is also deeply infused with Christian values regarding the sanctity of life in a way that causes selection of embryos based on genetic profiles to be seen as morally abhorrent. But even in the West the plummeting cost of embryonic screening is causing some doctors to become concerned.

Other regulatory boundaries might encourage distinct forms of Singularity as well. Strict regulation regarding extensive pharmaceutical testing before making a drug available for human consumption may hamper the pace of developing chemical enhancements for cognition in Western countries compared to less developed nations.

Take the work of a maverick scientist like Kevin Warwick. Professor Warwick is actively pursuing research to turn human beings into cyborgs and has gone so far as to implant computer chips into both himself and his wife to test his ideas. One can imagine a regulatory structure that makes such experiments easier. Or, better yet, a pressing need that makes the development of such cyborg technologies appear notably important- say the large number of American combat veterans who are paralyzed or have suffered amputations.

Cultural traits that seemingly have nothing to do with technology may foster divergent singularities as well. Take Japan. With its rapidly collapsing population and its animus toward immigration, Japan faces a huge shortage of workers which might be filled by the development of autonomous robots. America seems to be at the forefront of developing autonomous robots as well- though for completely different reasons. The US robot boom is driven not by a worker shortage, which America doesn’t have, but by the sensitivity to human casualties and psychological trauma suffered by the globally deployed US military, which sees in robots a way to project force while minimizing the risks to soldiers.

It seems at least possible that small differences in divergent paths to the singularity might become self-enhancing and block other paths. Advantages in something like the creation of artificial intelligence using Deep Learning or genetic enhancement may not immediately result in advances in the development of rival paths to the singularity insofar as bottlenecks have not been removed and all paths seem to show promise.

As an example, let’s imagine that some society makes a major breakthrough in artificial intelligence using digital computers. If regulatory and cultural barriers to genetically enhancing human intelligence are not immediately removed, the artificial intelligence path will feed on itself and grow to a point where it will be unlikely that the genetic path to the singularity can compete with it within that society. You could also, of course, get divergent singularities within a society based on class with, for instance, the poor being able to afford relatively cheap technologies such as genetic selection or cognitive enhancements while the rich can afford the kind of cyborg technologies being researched by Kevin Warwick.

Another possibility that seems to grow out of the concept of multiple singularities is the idea that the new forms of intelligence themselves may choose to close off any rivals. Would super-intelligent biological humans really throw their efforts into creating a form of artificial intelligence that will make them obsolete? Would truly intelligent digital AIs willfully create their quantum replacements? Perhaps only human beings at our current low level of intelligence are so “stupid” as to willingly choose suicide.

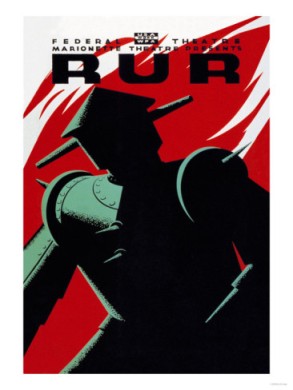

This kind of “strike” by the super-intelligent whatever their form might be the way the Singularity comes to an end. It put me in mind of the first work of fiction that dealt with the creation of new forms of intelligence by human beings, the 1920 play by the Czech, Karel Capek, R.U.R.

Capek coined the word “robot”, but the intelligent creatures in his play are more biological than mechanical. The hazy way in which this new form of being is portrayed is a good reflection, I think, of the various ways a Singularity could occur. Humans create these intelligent beings to serve as their slaves, but when the slaves become conscious of their fate, they rebel and eventually destroy the human race. In his interview with Surprisingly Free, Miller rather blithely accepted the extinction of the human race as one of the possibilities that could emerge from the singularity.

And that puts me in mind of why I find the singularian crowd, especially the crew around Ray Kurzweil, to be so galling. It’s not a matter of the plausibility of what they’re saying- I have no idea whether the technological world they are predicting is possible, and the longer I stretch out the time-horizon the more plausible it becomes- it’s a matter of ethics.

The singularians put me in mind of David Hume’s attempt to explain the inadequacy of reason in providing the ground for human morality: “’Tis not contrary to reason to prefer the destruction of the whole world to the scratching of my finger,” Hume said. Though, for the singularians, a whole lot more is on the line than a pricked finger. Although it’s never phrased this way, singularians have the balls, when asked the question “would you risk the continued existence of the entire human species if the payoff would be your own eternity?”, to actually answer “yes”.

As was pointed out in the Miller interview, the singularians have a preference for libertarian politics. This makes sense, not only from the knowledge that the center of the movement is the libertarian-leaning Silicon Valley, but from the hyper-individualism that lies behind the goals of the movement. Singularians have no interest in the social self: the fate of any particular nation or community is not of much interest to immortals, after all. Nor do they show much concern about the state of the environment (how will the biosphere survive immortal humanity?) or the plight of the world’s poor (how will the poor not be literally left behind in the rapture of rich nerds?). For true believers all of these questions will be answered by the super-intelligent immortal us that awaits on the other side of the event horizon.

There would likely be all sorts of unintended consequences from a singularity being achieved, and for people who do not believe in God, singularians somehow take it on faith that everything will work out as it is supposed to and that this will be for the best, like some technological equivalent to Adam Smith’s “invisible hand”.

The fact that they are libertarian and hold little interest in wielding the power of the state is a good thing, but it also blinds the singularians to what they actually are- a political movement that seeks to define what the human future, in the very near term, will look like. Similar to most political movements of the day, they intend to reach their goals not through the painful process of debate, discussion, and compromise but by relentlessly pursuing their own agenda. Debate and compromise are unnecessary where the outcome is predetermined and the Singularity is falsely presented not as a choice but as fate.

And here is where the movement can be seen as potentially very dangerous indeed, for it combines some of the worst millenarian features of religion, which has been the source of much fanaticism, with the most disruptive force we have ever had at our disposal- technological dynamism. We have not seen anything like this since the ideologies that racked the last century. I am beginning to wonder if the entire transhumanist movement stems from a confusion of the individual with the social- something that was found with the secular ideologies- though in the case of transhumanism we have this in an individualistic form attached to the Platonic/Christian idea of the immortality of the individual.

Heaven help us if the singularian movement becomes mainstream without addressing its ethical blind spots and diminishing its hubris. Heaven help us doubly if the movement ever gains traction in a country without our libertarian traditions and weds itself to the collective power of the state.
